Making NPM scripts work cross platform

TL;DR: Here are the changes we made to npm scripts #

"scripts": {

- "build": "set ELEVENTY_ENV=prod&& npx @11ty/eleventy",

- "serve": "set ELEVENTY_ENV=dev&& npx @11ty/eleventy --serve",

- "clean": "rm -rf _site"

+ "build": "npx cross-env ELEVENTY_ENV=prod npx @11ty/eleventy",

+ "serve": "npx cross-env ELEVENTY_ENV=dev npx @11ty/eleventy --serve",

+ "clean": "npx rimraf _site"

}

And if you don't want to use npx, you can install cross-env and rimraf globally:

npm i rimraf -g

npm i cross-env -g

The Problem #

The fundamental challenge is that whatever string ends up in the scripts section gets sent directly to whatever shell you've selected. That means that for true cross-platform compatibility, the only safe scripts are those whose syntax and commands overlap perfectly in both cmd and bash, which is a small Venn diagram.

Running on the wrong environment will yield something like this:

'rm' is not recognized as an internal or external command, operable program or batch file.

We largely work on Windows machines, but our deployment is handled via Netlify on Linux, so it's important there's a functioning script for each environment.

Here are some of the commands we'd like to run, with several different implementations across environments, to see if we can find something that works universally.

- Setting Environment Variables

- Deleting Folder/Files

- Running Multiple Commands

#1 Setting Environment Variables #

BASH / Linux #

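In bash, an assignment placed directly in front of a command (VAR=value command) is added to the environment of that one command. The two forms below are not interchangeable, though. In the first, the shell expands $MY_NAME before the assignment takes effect, so echo prints an empty value unless MY_NAME was already set; a program that reads MY_NAME from its own environment, such as a Node process, would still see Kyle. In the second, && turns the assignment into a separate command that sets a shell variable: the later $MY_NAME expands to Kyle, but child processes will not see the variable unless it is exported.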

MY_NAME=Kyle echo Hi, \'$MY_NAME\'

MY_NAME=Kyle && echo Hi, \'$MY_NAME\'

CMD / Windows #

set MY_NAME=Kyle&& echo Hi '%MY_NAME%'

*Note 1: Do not put a space between the value being set and the && operator. cmd treats everything after = up to the operator as the value, so a space there becomes a trailing space in the variable.

*Note 2: In this particular example, cmd expands %MY_NAME% when it parses the line, before set has run. To set and use the variable on the same line you need to wrap the line in a cmd invocation, pass /V to enable delayed environment variable expansion, and delimit the variable with ! instead of %. Consuming the variable in a subsequent command works without any of this.

Node.js #

Attach directly to process.env

process.env.MY_NAME = 'Kyle';
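Values assigned to process.env are inherited by child processes started afterwards, which is what makes this approach useful for launching a CLI. Here is a minimal sketch, where readInChild is a hypothetical helper name and the child is simply another Node process:

```javascript
const { execFileSync } = require('child_process');

// Hypothetical helper: set a variable on process.env, then ask a child
// Node process to print what it sees under the same name.
function readInChild(name, value) {
  process.env[name] = value;
  // process.execPath is the absolute path of the running node binary,
  // so neither cmd nor bash is involved in starting the child.
  const output = execFileSync(
    process.execPath,
    ['-p', `process.env[${JSON.stringify(name)}]`],
    { encoding: 'utf8' }
  );
  return output.trim();
}

console.log(readInChild('MY_NAME', 'Kyle'));
```

Because no shell parses the assignment, the same code behaves the same way on Windows and Linux.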

NPM CLI #

From How to set environment variables in a cross-platform way, there's a package by Kent C. Dodds called cross-env that allows you to set environment variables across multiple platforms like this:

npx cross-env ELEVENTY_ENV=prod npx @11ty/eleventy

.ENV file #

You can create a file named .env

MY_NAME=Kyle

And then load it into the process with a library like dotenv:

require('dotenv').config()
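To make the idea concrete, here is a deliberately simplified sketch of what loading such a file involves. It is not dotenv's actual implementation: parseEnv and applyEnv are hypothetical names, and quoting, escapes, and multi-line values are ignored.

```javascript
// Hypothetical, simplified parser for KEY=VALUE lines. It skips blank
// lines and # comments and does not handle quotes or multi-line values.
function parseEnv(text) {
  const result = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === '' || line.startsWith('#')) continue;
    const separator = line.indexOf('=');
    if (separator === -1) continue; // not an assignment, skip it
    const key = line.slice(0, separator).trim();
    const value = line.slice(separator + 1).trim();
    result[key] = value;
  }
  return result;
}

// Copy parsed values onto a target (process.env by default) without
// overwriting variables that are already set there.
function applyEnv(parsed, target = process.env) {
  for (const [key, value] of Object.entries(parsed)) {
    if (target[key] === undefined) target[key] = value;
  }
  return target;
}
```

Leaving existing variables untouched is an assumption made here so that values set by the shell or the build server take priority over the file.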

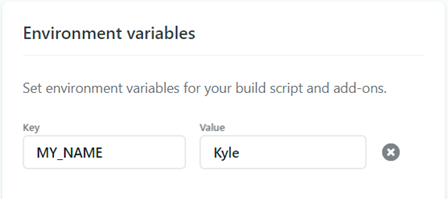

Build Server / Netlify #

You might also set some environment variables on your build server settings like in Netlify Environment Variables:

#2 Delete Files / Folder #

BASH / Linux #

rm with -f force and -r recursive

rm -rf _site

CMD / Windows #

The Windows equivalent is rd (remove directory), with /s to delete all subfolders and /q to skip the y/n confirmation prompt

rd /s /q _site

Node.js #

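Use fs.rmdir to remove an entire directory; the recursive option is available as of Node v12.10.0, and the callback is required. On Node v14.14.0 and later, fs.rm is the preferred call, since the recursive option of fs.rmdir has been deprecated.

const fs = require('fs');

// The callback receives an error if the directory could not be removed.
fs.rmdir("_site", { recursive: true }, (err) => { if (err) throw err; });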
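For a build script, a synchronous variant is often simpler. The sketch below assumes Node v14.14.0 or later, where fs.rmSync is available; clean is a hypothetical helper name, and the demo runs against a throwaway temp directory instead of a real _site folder.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: delete a directory tree, succeeding even when the
// directory does not exist (like rm -rf). Needs Node v14.14.0 or later.
function clean(dir) {
  fs.rmSync(dir, { recursive: true, force: true });
}

// Demo: build a small nested tree in the OS temp folder, then remove it.
const demoRoot = fs.mkdtempSync(path.join(os.tmpdir(), 'clean-demo-'));
fs.mkdirSync(path.join(demoRoot, 'nested'));
fs.writeFileSync(path.join(demoRoot, 'nested', 'index.html'), '<p>hi</p>');
clean(demoRoot);
console.log(fs.existsSync(demoRoot)); // false
```

The force option is what makes a second call on an already deleted folder a no-op instead of an error.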

NPM CLI #

rimraf is a recursive delete package that has a CLI which can be used like this:

npx rimraf _site

#3 Running Multiple Commands #

BASH / Linux #

How to run multiple bash commands - Docs on list of commands / control operators

| Syntax | Description |
|---|---|
| A ; B | Run A and then run B |
| A && B | Run A, if it succeeds then run B |
| A \|\| B | Run A, if it fails then run B |

echo hey && echo you

CMD / Windows #

How to run two commands in one cmd line - Docs on redirection and conditional processing symbols

| Syntax | Description |
|---|---|
| A & B | Run A and then run B |
| A && B | Run A, if it succeeds then run B |
| A \|\| B | Run A, if it fails then run B |

echo hey && echo you

NPM CLI #

You can run npm scripts sequentially or in parallel with a library called npm-run-all.

If you had the following scripts in your package.json:

"scripts": {

"lint": "eslint src",

"build": "babel src -o lib"

}

You could run them all like this:

$ npm-run-all -s lint build # sequentially

$ npm-run-all -p lint build # in parallel
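The sequential case has the same semantics as chaining with &&, and it can be sketched in plain Node as well. This is only an illustration of the idea, not how npm-run-all is implemented; runSequentially is a hypothetical name, and the demo steps call the current node binary so that they run on any platform.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper: run each [command, args] step in order and stop at
// the first non-zero exit code, like chaining the steps with &&.
function runSequentially(steps) {
  for (const [command, args] of steps) {
    const result = spawnSync(command, args, { stdio: 'inherit' });
    if (result.error) throw result.error; // e.g. the command was not found
    // status is null when the child was killed by a signal; treat as failure.
    const status = result.status === null ? 1 : result.status;
    if (status !== 0) return status;
  }
  return 0;
}

const node = process.execPath;
const exitCode = runSequentially([
  [node, ['-e', 'console.log("hey")']],
  [node, ['-e', 'process.exit(3)']],
  [node, ['-e', 'console.log("never printed")']],
]);
console.log(exitCode);
```

The third step never runs because the second one exits with a non-zero code, which is the behaviour you get from A && B in both shells.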

Possible Solutions #

Create Node Script #

One escape hatch is to invoke a script contained in a Node.js file, because Node is a prerequisite anyway and is cross-OS compatible. However, this seems pretty heavy-handed for a one-line function that deletes a directory. This is similar to the approach taken by Create React App with react-scripts, but they have substantially more complexity to handle.

Another challenge with this approach is calling CLI / binary commands like eleventy --serve. To do that we need to execute a command line binary with Node.js and also pipe the response to stdout.

So it could work a little something like this:

File: package.json

"scripts" : {

"build" : "node build.js"

}

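File: build.js

const { exec } = require('child_process');

// Print the command's output, and surface failures instead of hiding them.
const log = (err, stdout, stderr) =>
  err ? console.error(stderr || err.message) : console.log(stdout);

exec("git config --global user.name", log);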
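One caveat with exec is that it buffers the command's output and only hands it to the callback once the command has exited, which is awkward for a long-running command such as eleventy --serve. A sketch of an alternative uses spawn with inherited stdio so that output streams live. The run helper is a hypothetical name, and shell: true is an assumption made here so that Windows can resolve .cmd shims such as npx.

```javascript
const { spawn } = require('child_process');

// Hypothetical helper: run a command line through the platform's default
// shell, stream its output live, and resolve with its exit code.
function run(commandLine, extraEnv = {}) {
  return new Promise((resolve, reject) => {
    const child = spawn(commandLine, {
      shell: true,      // lets Windows resolve .cmd shims such as npx
      stdio: 'inherit', // the child writes straight to this terminal
      env: { ...process.env, ...extraEnv },
    });
    child.on('error', reject);
    child.on('close', (code) => resolve(code));
  });
}

// In build.js this might be used as:
// run('npx @11ty/eleventy', { ELEVENTY_ENV: 'prod' })
//   .then((code) => process.exit(code));
```

Passing the environment through the env option also sidesteps the set versus export syntax difference entirely.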

Also, if an OS-agnostic Node command won't work, you can use require("os") to get "operating system-related utility methods and properties"
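As a small illustration of branching on the platform, the sketch below picks between the two native delete commands covered earlier. removeDirCommand is a hypothetical helper, and the platform parameter exists only so that both branches can be exercised from any machine.

```javascript
const os = require('os');

// Hypothetical helper: choose the native delete command for a platform.
// os.platform() returns 'win32' on Windows, including 64-bit Windows.
function removeDirCommand(dir, platform = os.platform()) {
  return platform === 'win32' ? `rd /s /q ${dir}` : `rm -rf ${dir}`;
}

console.log(removeDirCommand('_site'));
```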

Environment Specific Scripts #

As outlined in package.json with OS specific script, there's a library called run-script-os that helps automagically toggle between named script versions

So if we had the following scripts setup for each supported environment:

"scripts": {

"build": "npx run-script-os",

"build:windows": "SET ELEVENTY_ENV=prod&& npx @11ty/eleventy",

"build:default": "export ELEVENTY_ENV=prod && npx @11ty/eleventy"

}

You could manually run with the fully qualified name like this:

$ npm run build:windows

Or you could let run-script-os decide for you:

$ npm run build

If you have even 3-4 scripts in your package.json, this probably starts cluttering them up with 9-12 different variations, but is still relatively lightweight and sets the correct syntax for each environment.
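The selection step itself can be pictured roughly as follows. This is a hypothetical lookup modelled only on the build:windows and build:default names shown above, not the package's real code, and the real package may recognise more platform names than these two.

```javascript
// Hypothetical lookup: given the scripts object from package.json and a
// base name such as "build", return the variant to run on this platform.
function resolveScriptName(scripts, base, platform = process.platform) {
  const candidates =
    platform === 'win32'
      ? [`${base}:windows`, `${base}:default`]
      : [`${base}:default`];
  const match = candidates.find((name) => name in scripts);
  if (!match) {
    throw new Error(`No script found for "${base}" on ${platform}`);
  }
  return match;
}

const exampleScripts = {
  'build': 'npx run-script-os',
  'build:windows': 'SET ELEVENTY_ENV=prod&& npx @11ty/eleventy',
  'build:default': 'export ELEVENTY_ENV=prod && npx @11ty/eleventy',
};
console.log(resolveScriptName(exampleScripts, 'build'));
```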

Use NPM CLI packages #

Leveraging NPM packages to enhance and supplant regular terminal commands gives us a platform agnostic way to call functionality that's running node under the hood. For maximum portability, I'm a fan of using npx to lower the onboarding cost of someone joining a project and installing dependencies. But once folks are brought up to speed, they can still do a global install of any command line packages for local performance.

At the potential cost of additional dependencies (none of which are felt by the end client), this probably gives us a solution that is both robust & terse as long as a CLI exists for what you're trying to do...

Spoiler! There's probably an NPM package for it

This is the approach we're currently using for this site.